Overview

Text Entry Augmentation (TEA) is a UXR & UXD project that explores how multilingual researchers interact with generative AI tools like ChatGPT for academic writing. Through mixed-method research with multilingual participants, our team uncovered how language barriers impact trust and accuracy. The resulting low-fidelity prototype reimagines text entry as a bilingual, confidence-building interface that supports seamless switching, verification, and equitable communication across languages.

Problem

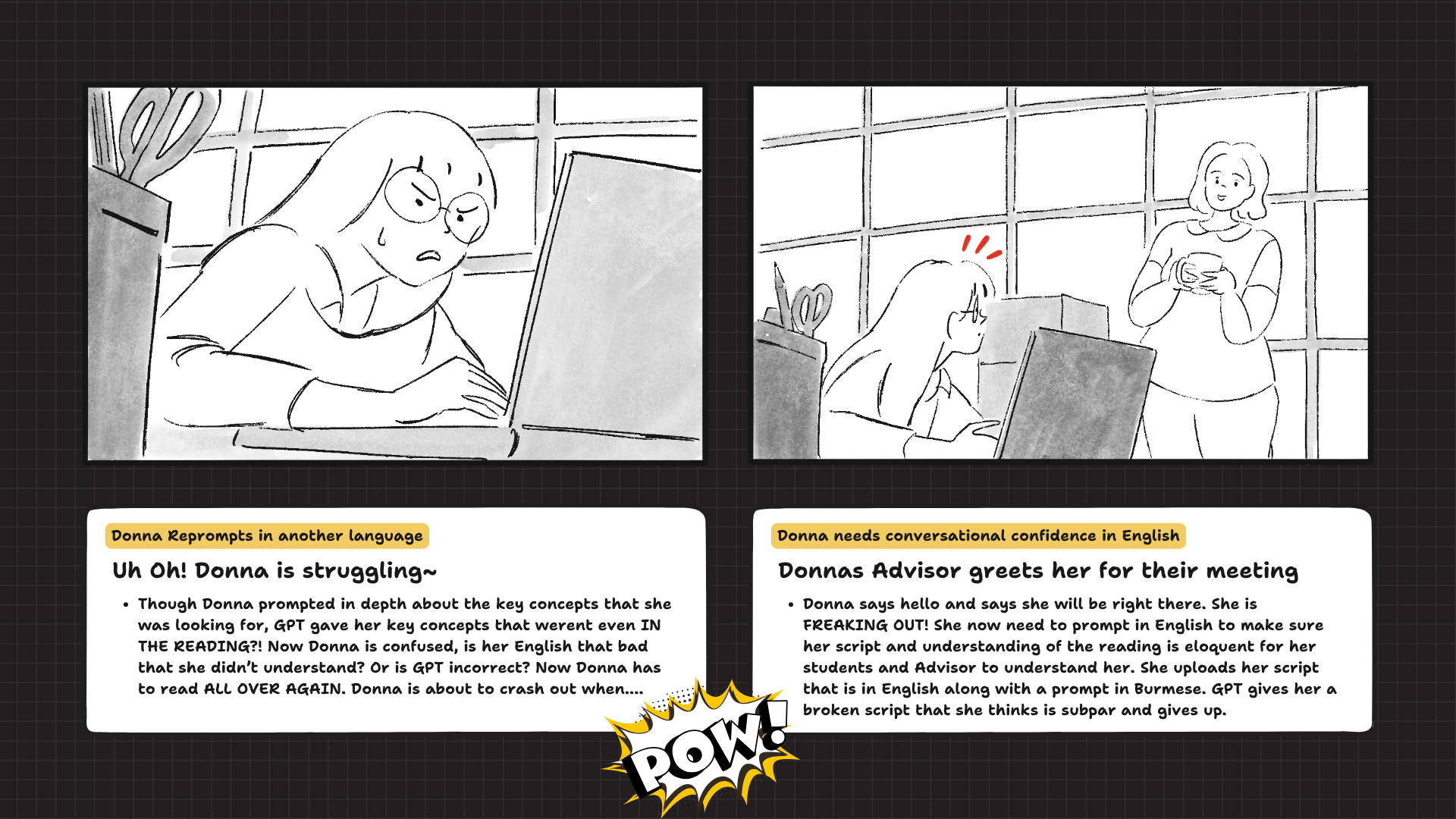

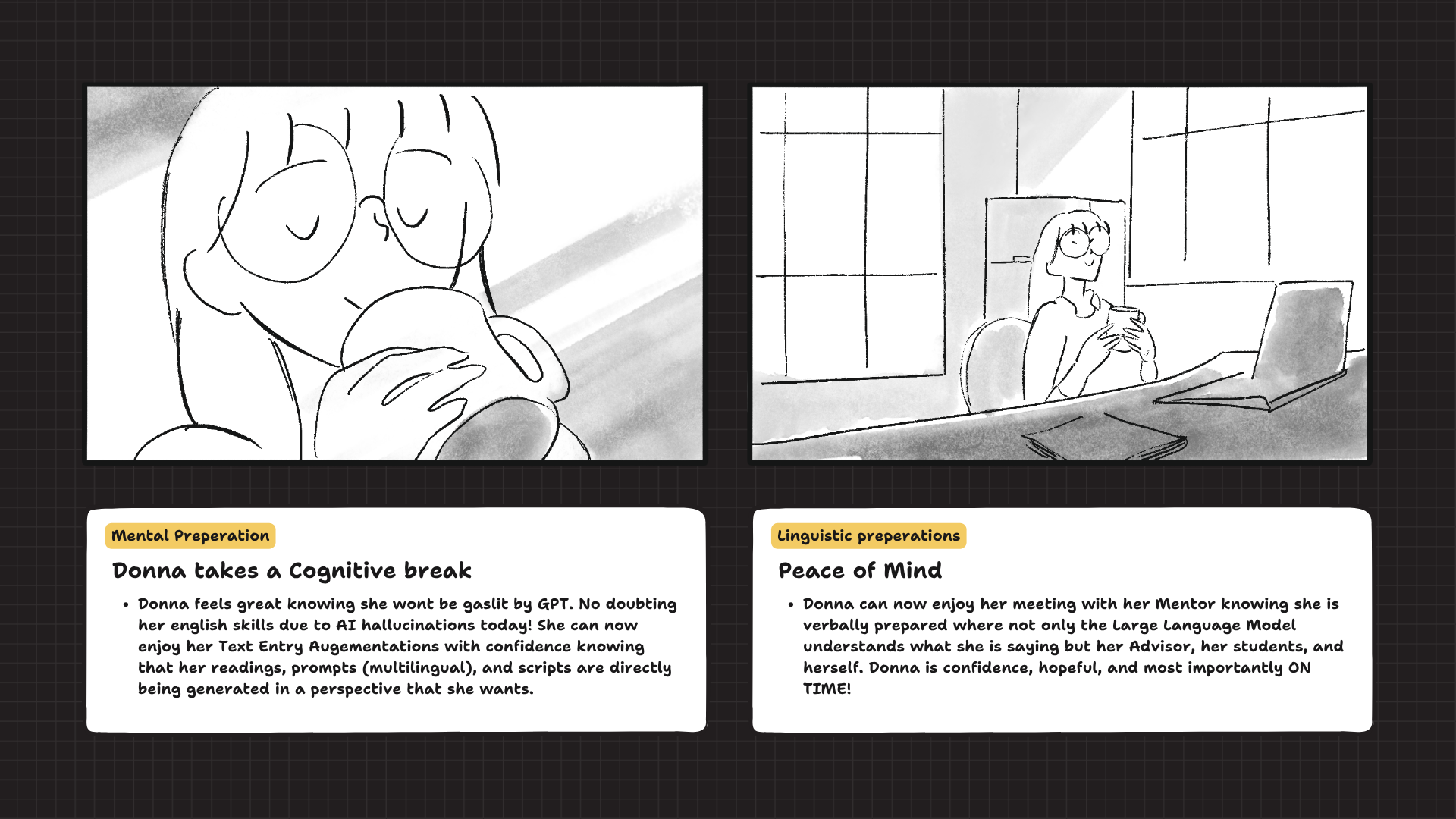

Multilingual researchers often struggle to express nuanced academic ideas through English-dominant AI systems. When switching between languages to clarify meaning, they experience mistranslations, hallucinations, and uneven tone or conceptual depth. These breakdowns reduce trust, increase cognitive load, and discourage the use of native languages, reinforcing inequities in global research communication.

Tools

Figma, Canva, Adobe Suite, Qualtrics, Miro, Overleaf

Timeline

Fall 2025

My Role

UX Researcher & Designer

Led contextual inquiries and affinity mapping.

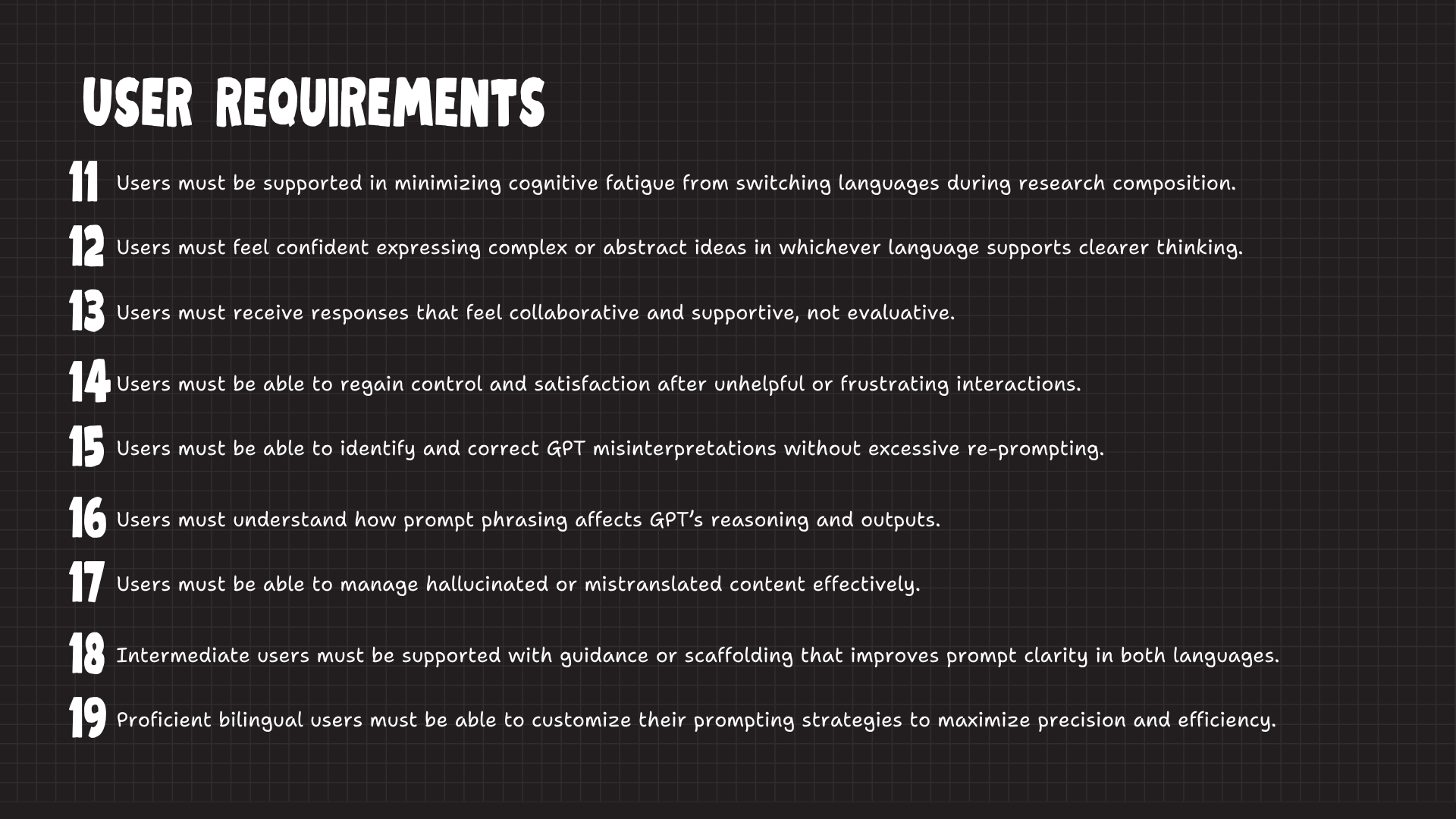

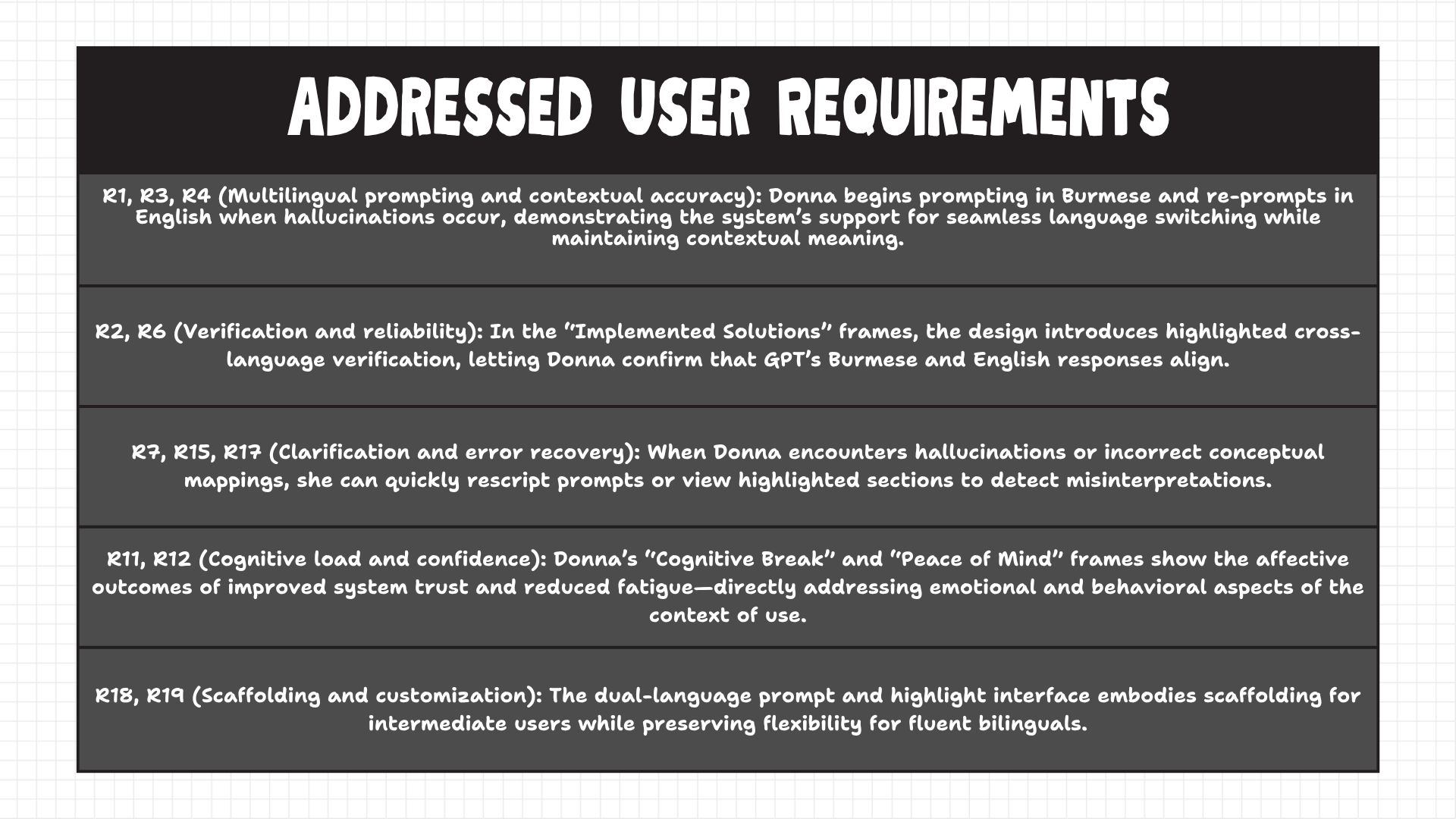

Synthesized breakdowns into actionable design requirements.

Co-authored and structured user requirements.

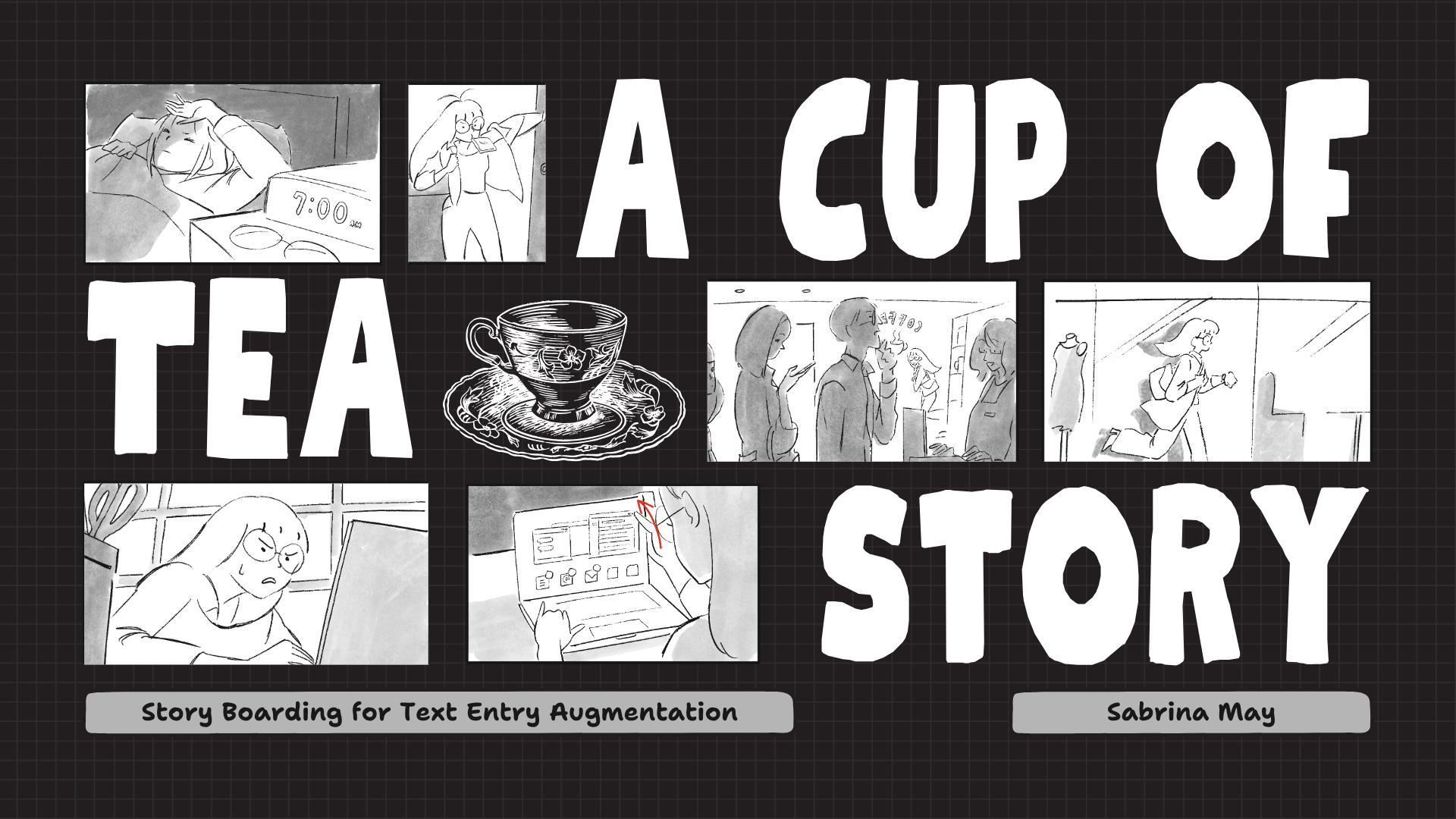

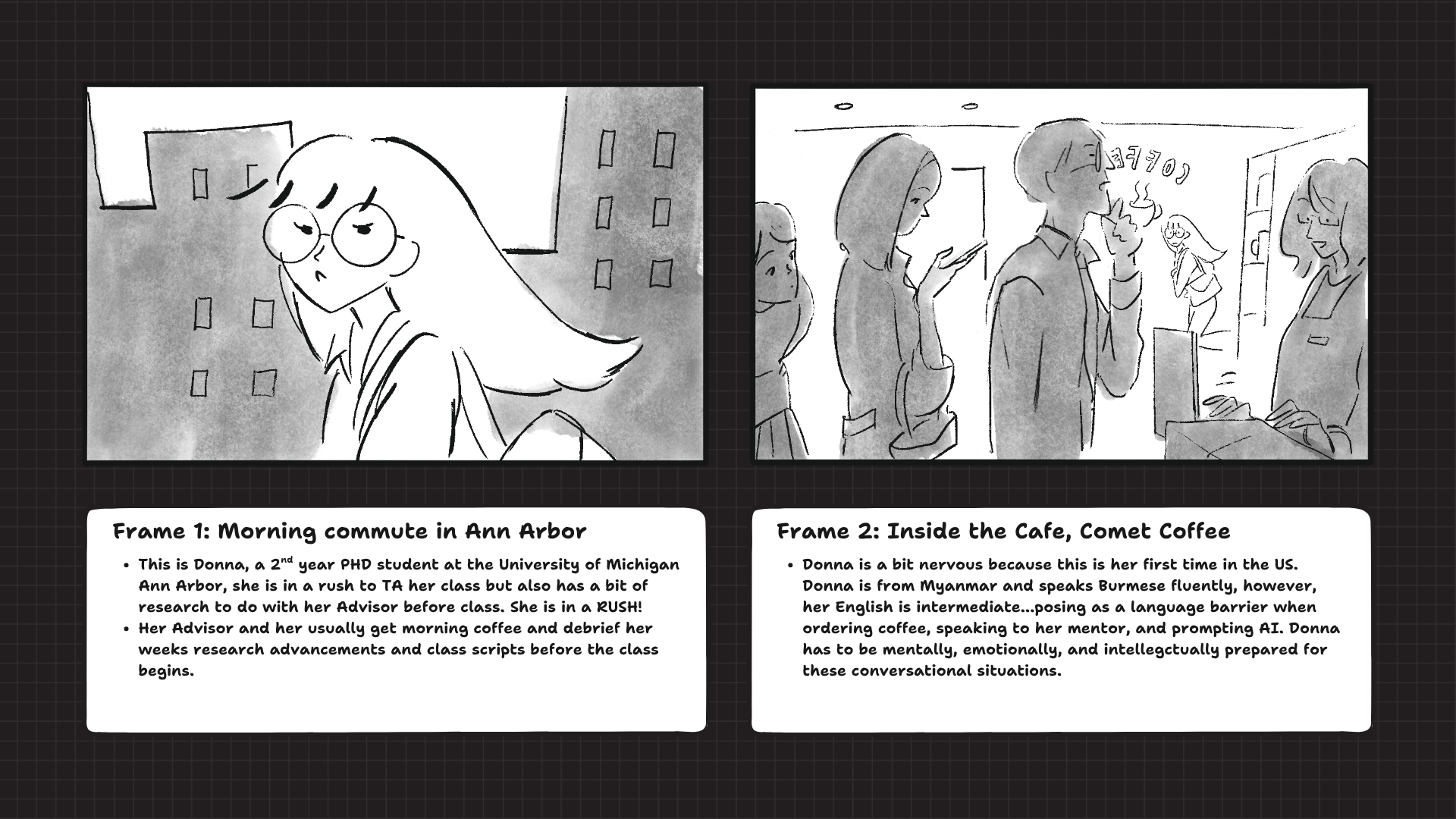

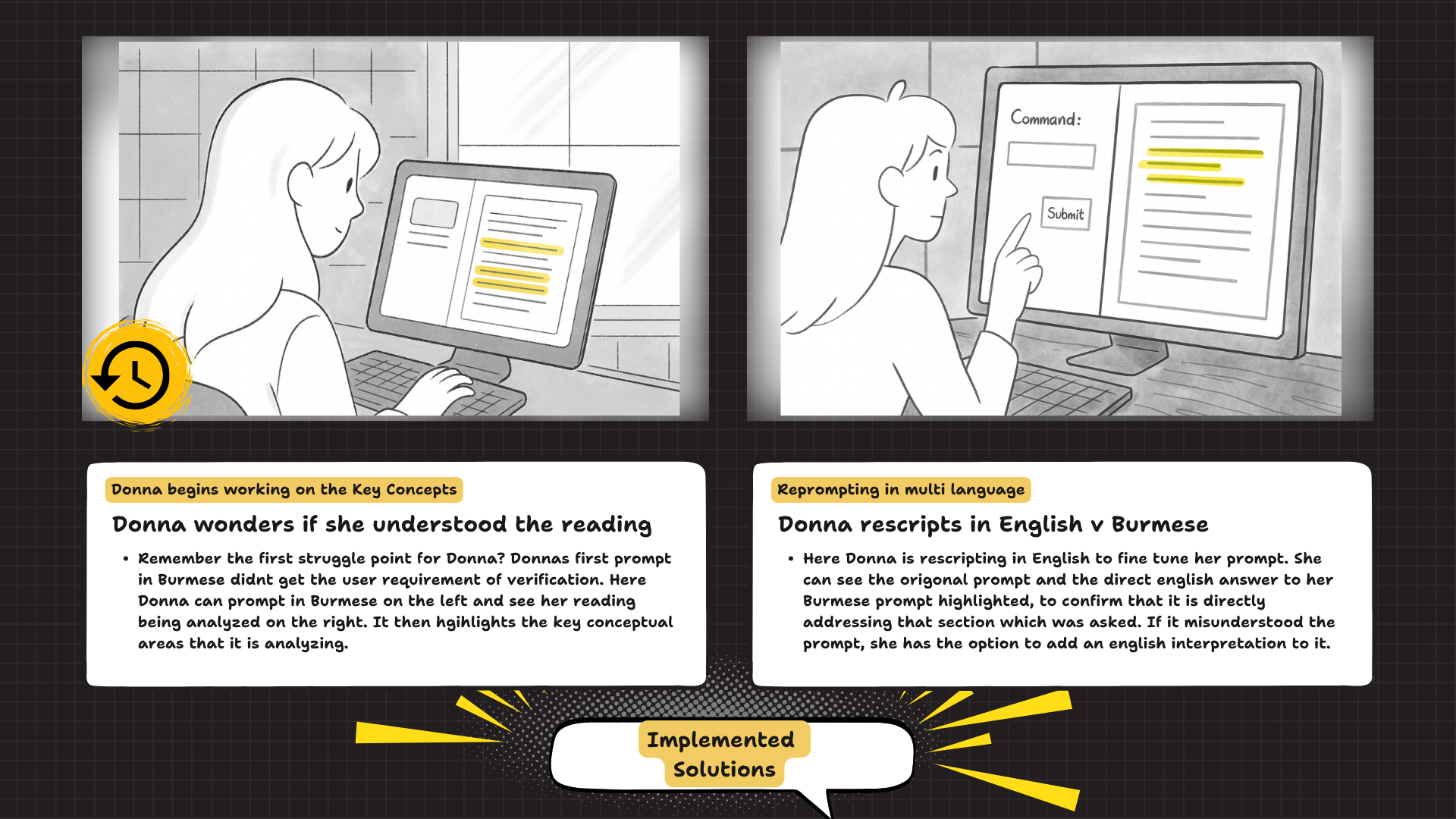

Contributed to persona and storyboarding for multilingual researcher scenarios.

Team

Advisor

Dr. Nikoli Banovic

Additional HCI Researchers

Marko Veljanovski, Nina Lei, Ishika Joshi, and Linea

Research Questions

How do multilingual researchers use generative AI to read, write, and translate academic texts?

What cognitive and linguistic challenges arise when switching between English and native languages?

How can interface design better support bilingual prompting and trust during AI-assisted research?

Mixed Method Studies

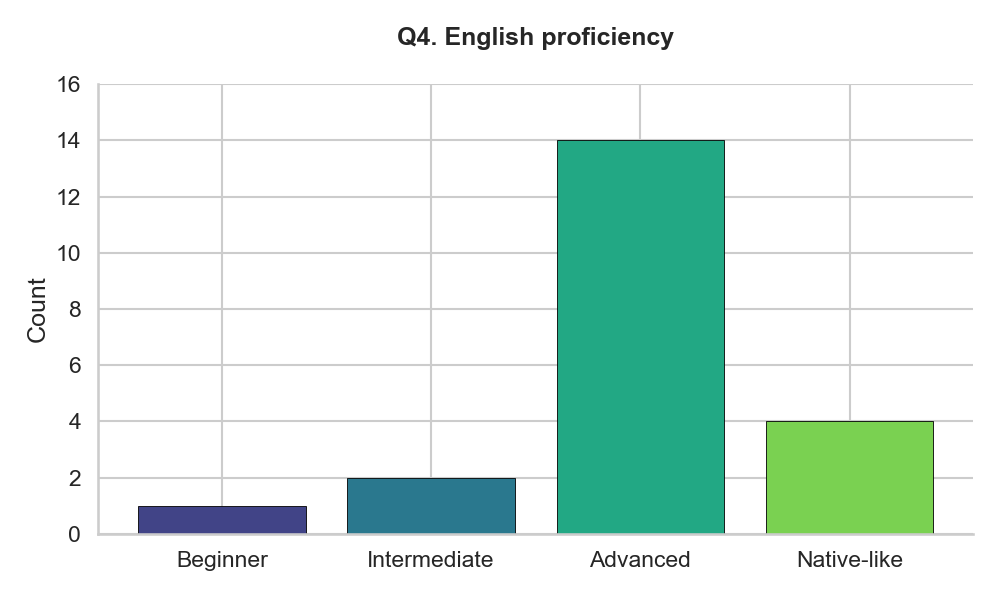

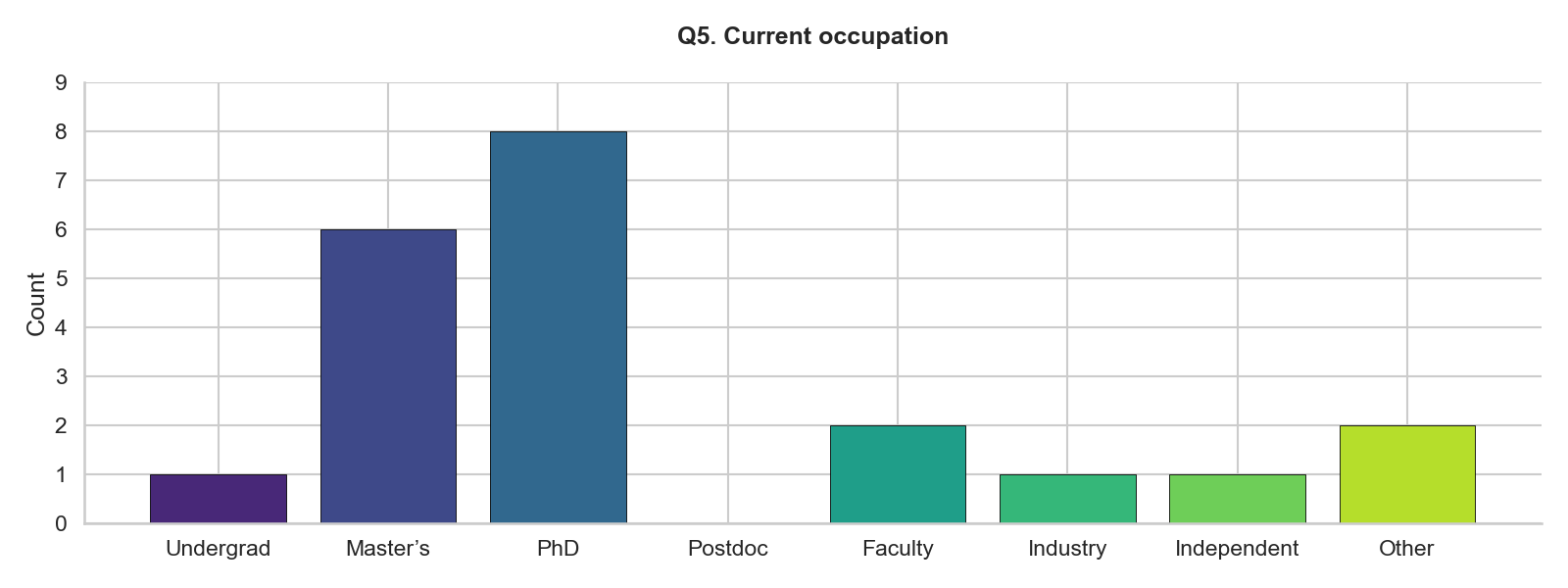

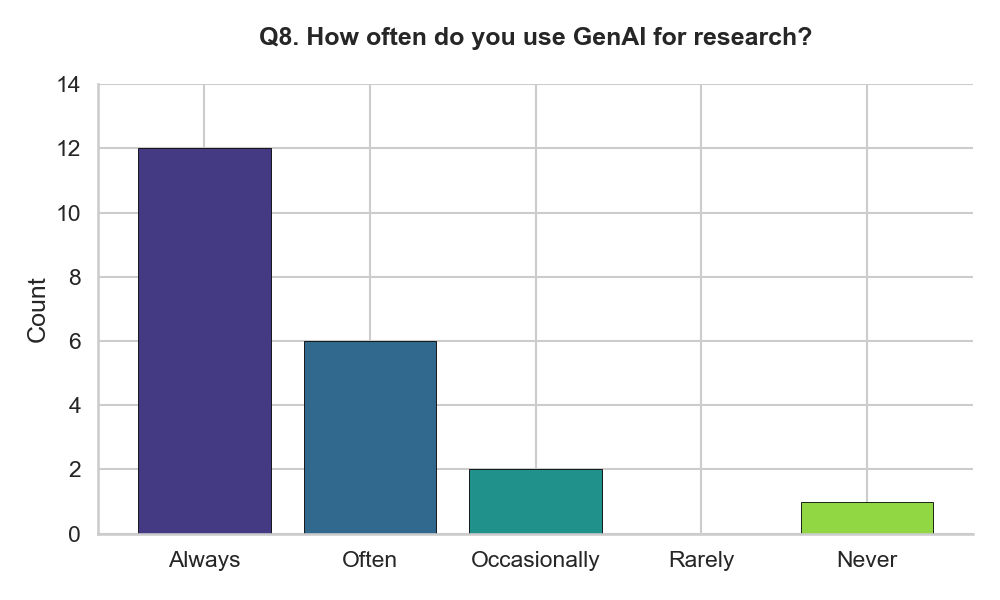

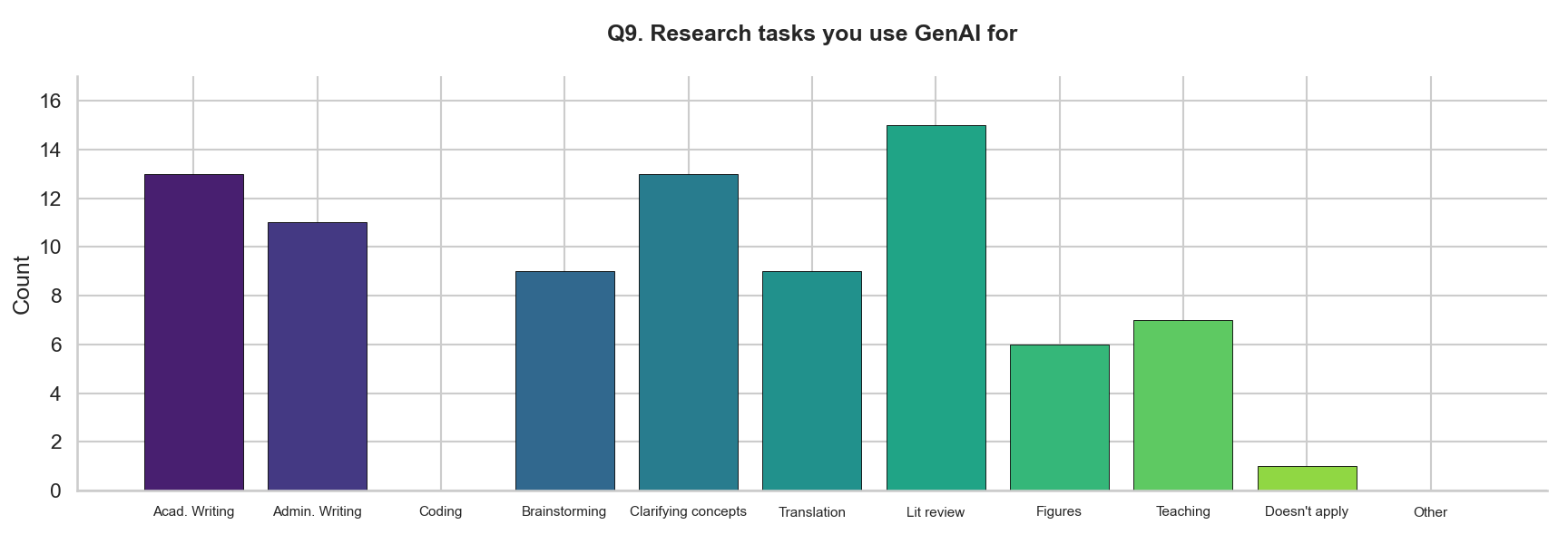

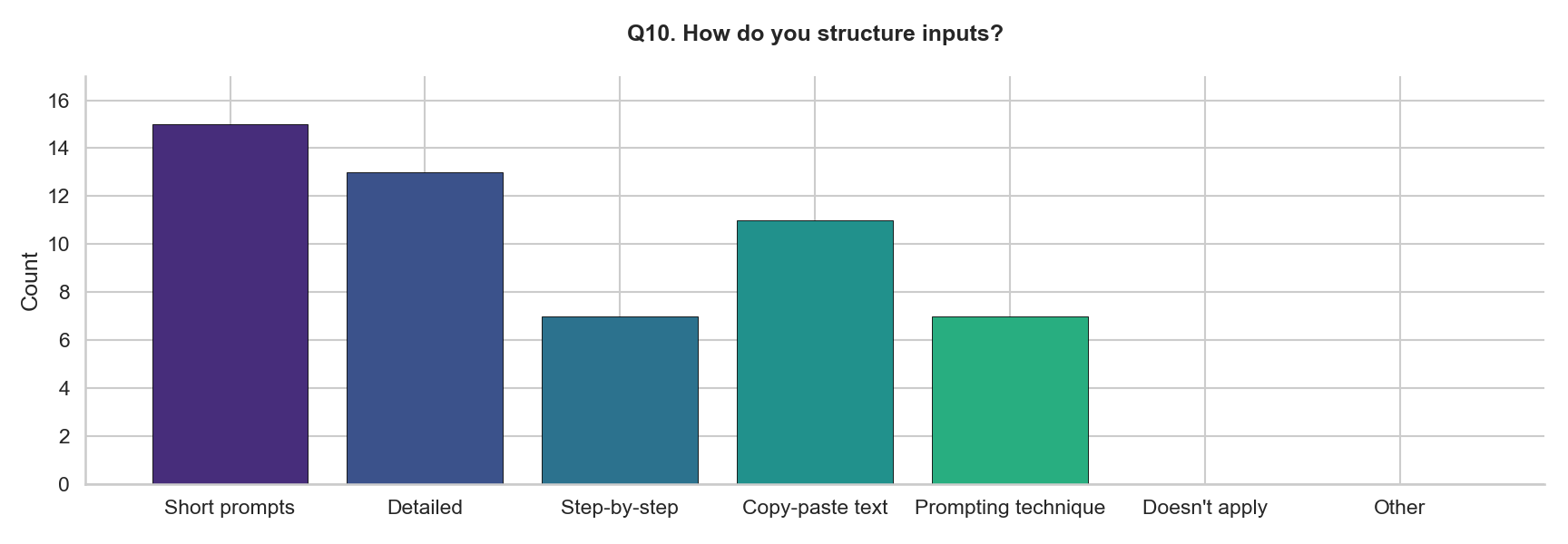

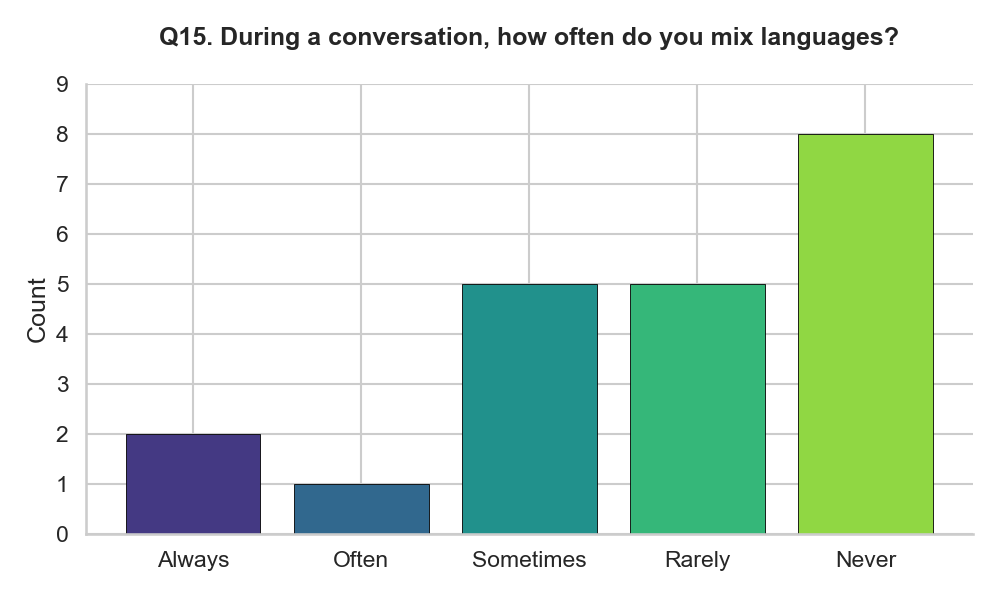

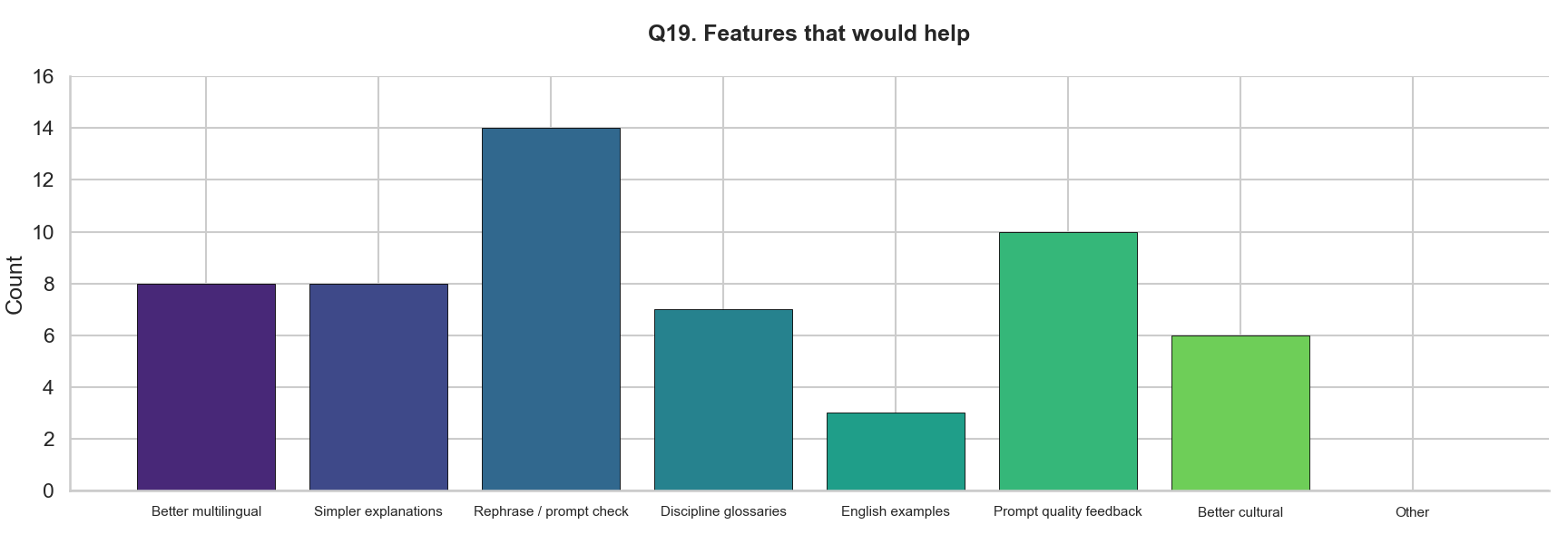

Survey (n=24)

Quantified multilingual AI use patterns, challenges, and coping strategies.

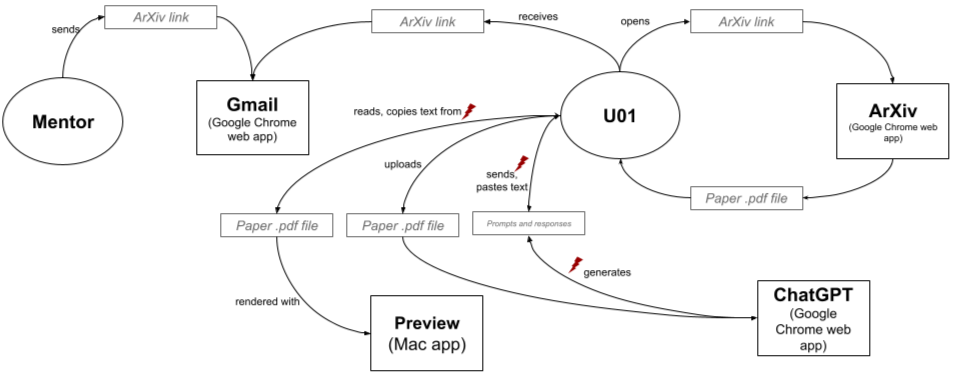

Contextual Inquiries (n=5)

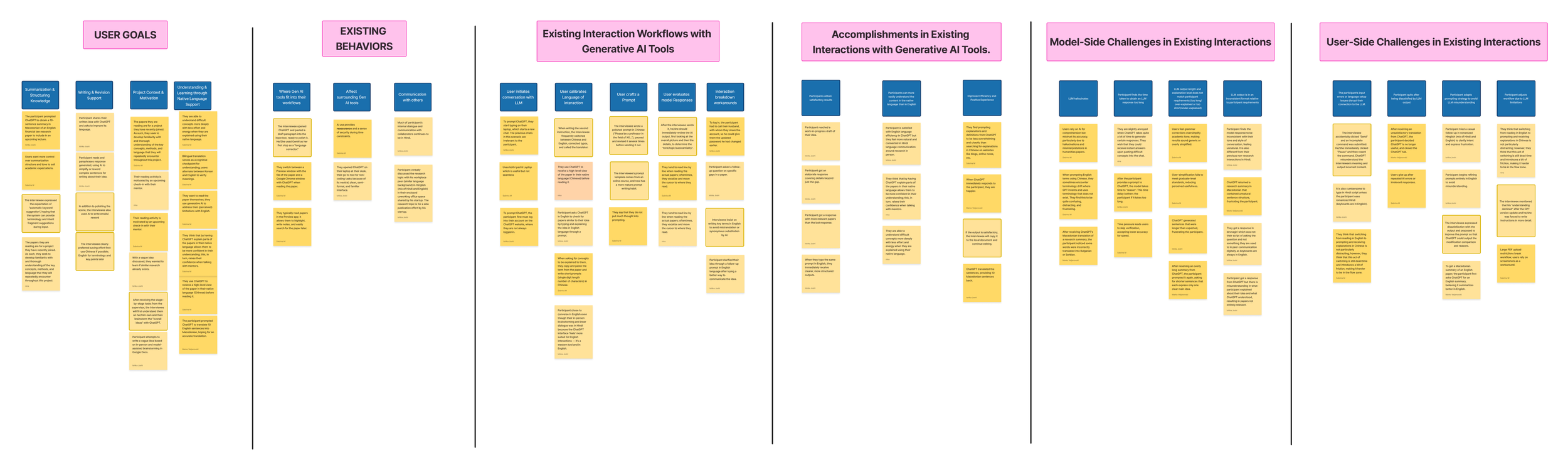

Observed multilingual AI use patterns, challenges, and coping strategies in participants' own workflows. Results were tracked in an affinity diagram.

Affinity Mapping & Flow Analysis

Identified recurring breakdowns and user adaptations across participants.

Key Findings

1. English Bias in Interaction: 80% of multilingual users avoid using native languages with AI due to lower accuracy and poor translation handling.

2. Trust and Verification Loops: Participants frequently double-check AI outputs with original sources or manually translate key terms.

3. Cognitive Overhead in Language Switching: Switching between languages interrupts workflow and increases fatigue.

4. Adaptive Workarounds: Users develop strategies like bilingual scaffolding, example-based re-prompting, and simplified phrasing to regain control.

Design Implications

Fluid Language Switching: Enable seamless transitions between languages within the same prompt.

Transparent Verification Tools: Integrate mechanisms for fact-checking and translation comparison.

Context-Aware Responses: Calibrate tone, detail, and explanation depth based on user intent.

Multilingual Confidence Cues: Indicate model reliability for each language to reduce anxiety and mistrust.

User Persona

Storyboard

Next Steps

Develop low-fidelity prototypes for text-entry scaffolds supporting bilingual interaction.

Conduct heuristic evaluation and usability testing to validate design feasibility and cognitive impact.

Explore partnerships with educational or research AI platforms to pilot multilingual prompt assistance.

Keywords

UX Research • Generative AI • Bilingual Interaction • Cognitive Load • Human-AI Collaboration • Text Entry Design