Text Entry Augmentation (TEA) for Multilingual Researchers

Timeline: 16 weeks

Role: HCI Researcher

Designing intelligent text input tools to streamline multilingual academic workflows with large language models

Methods: Survey, Contextual Inquiry, Testing

Overview

Context

Graduate HCI research course exploring equity in emerging AI technologies. Focus on supporting non-native English speakers in academic settings.

Problem

Current generative AI tools are optimized for English, creating inequities for multilingual researchers who must constantly translate and adapt their natural communication patterns.

Outcome

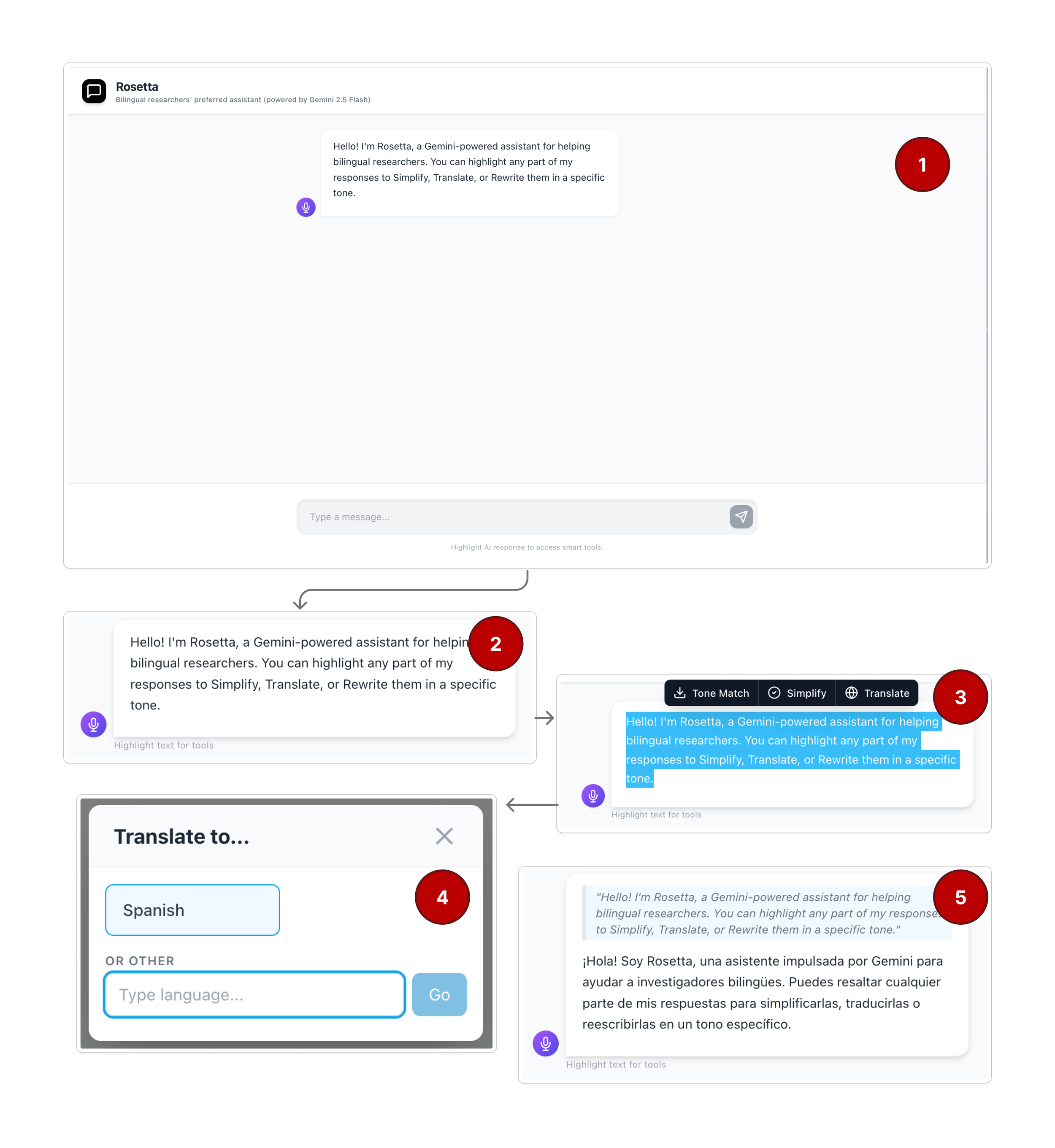

TEA interaction framework and Rosetta prototype: a contextual layer for AI chat interfaces that supports multilingual input and transparent response refinement.

Obstacles

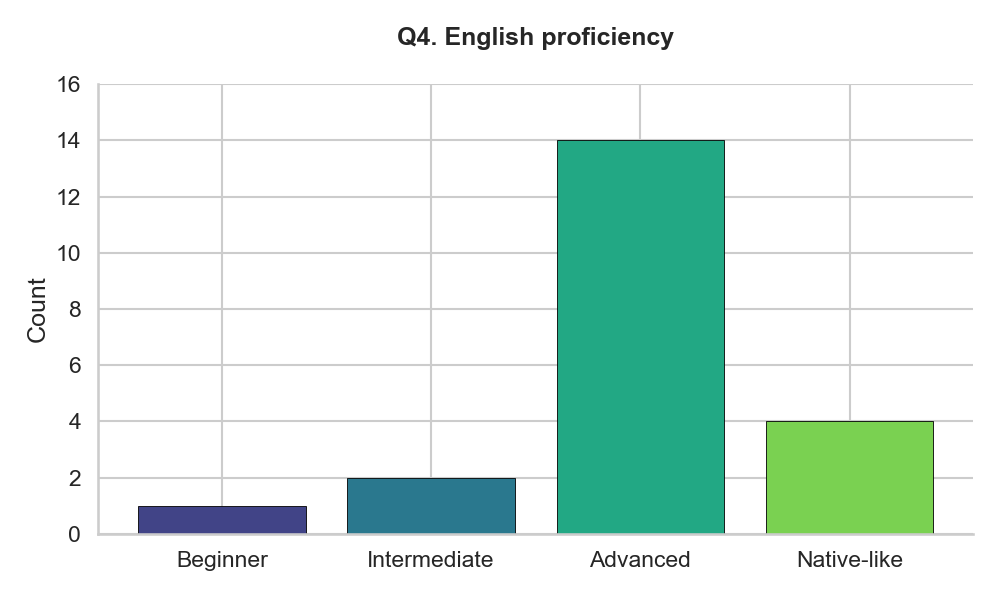

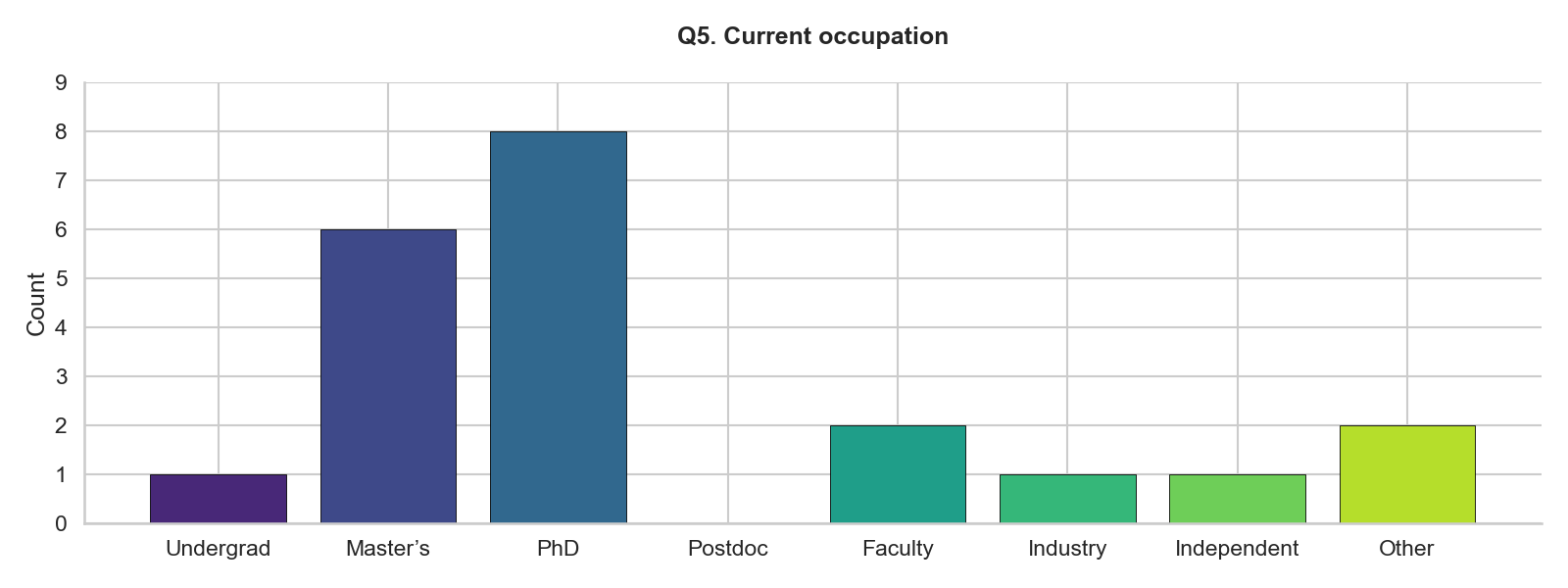

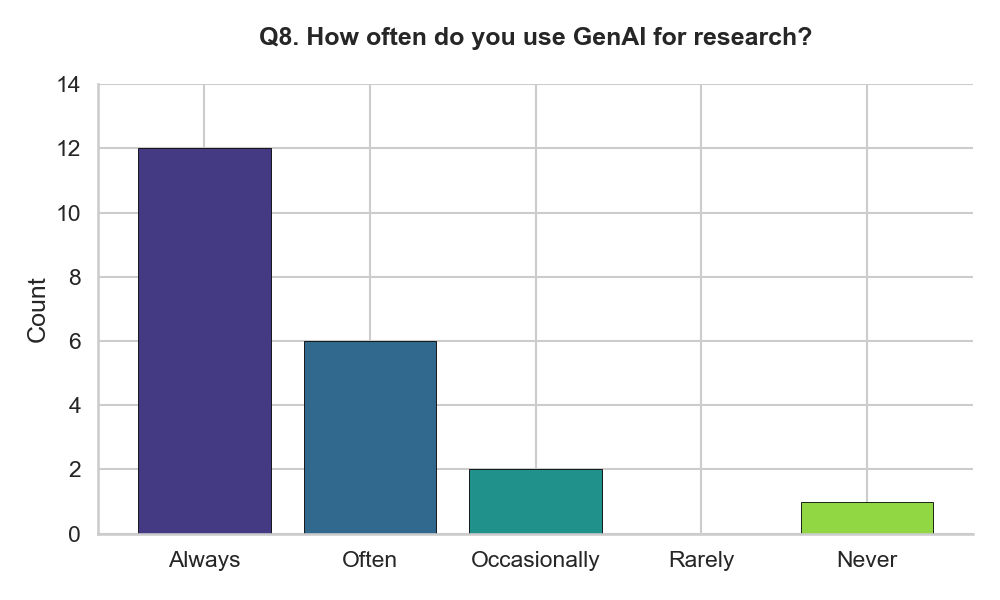

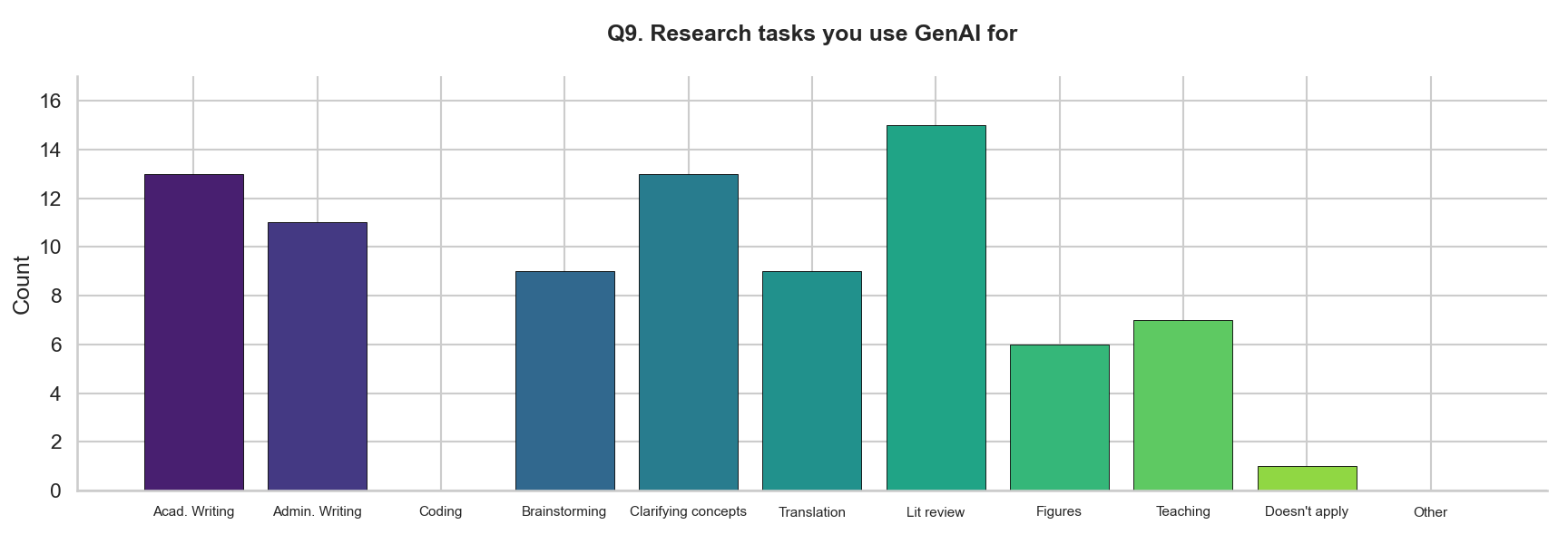

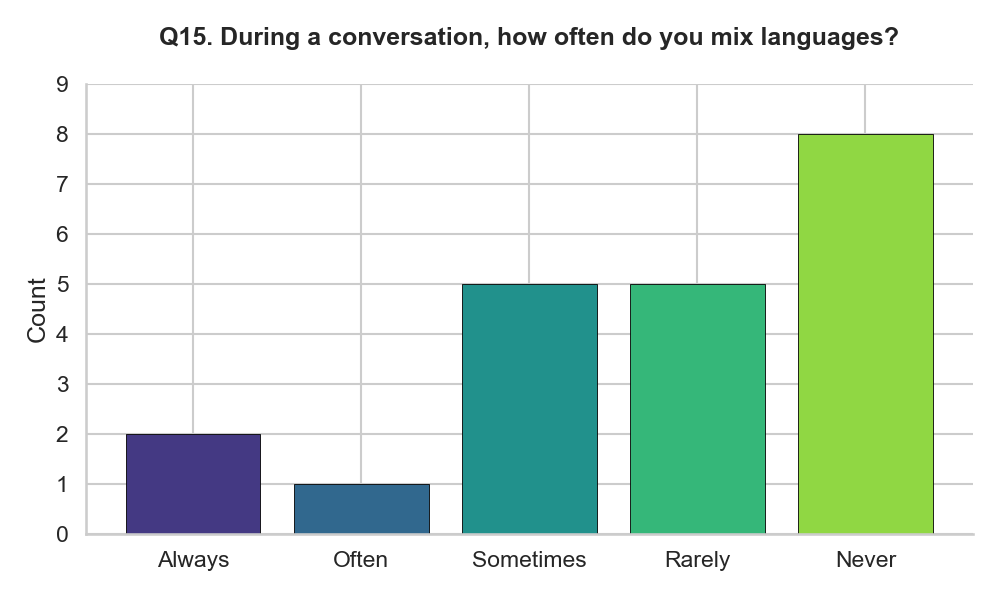

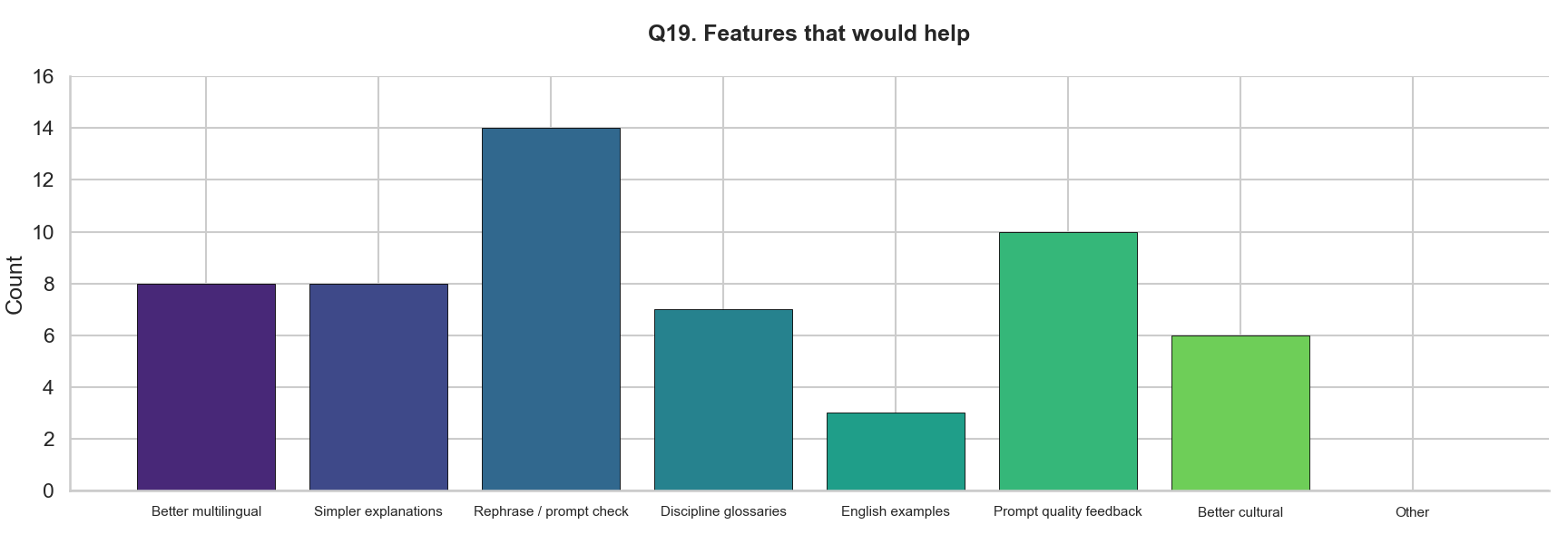

Through our survey of 52 multilingual researchers and contextual inquiries with 12 participants, we identified three interconnected challenges that current AI tools fail to address. These obstacles compound each other, creating a persistent barrier to equitable access for non-native English speakers in academic research contexts.

Language inequality in AI tools

Generative AI models perform significantly better with English prompts, disadvantaging multilingual users who think in other languages.

Cognitive load from switching

Constant translation between their thinking language and English input creates mental overhead and disrupts research flow.

Opaque tone and style shifts

AI responses can alter intended meaning or tone in ways that are difficult for non-native speakers to detect and correct.

Methods

Research

✨Survey (n=52)

✨Personas

✨Contextual Inquiry

✨Storyboards

✨Affinity mapping

52 survey respondents, 12 contextual inquiry participants

Evaluation

✨Heuristic evaluation

✨Simplified user testing

✨Controlled experiment (n=8)

8 participants in controlled evaluation with baseline comparison

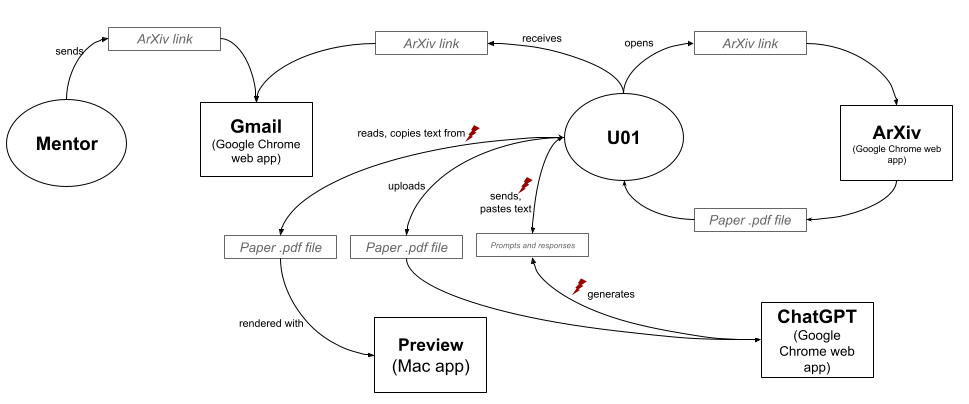

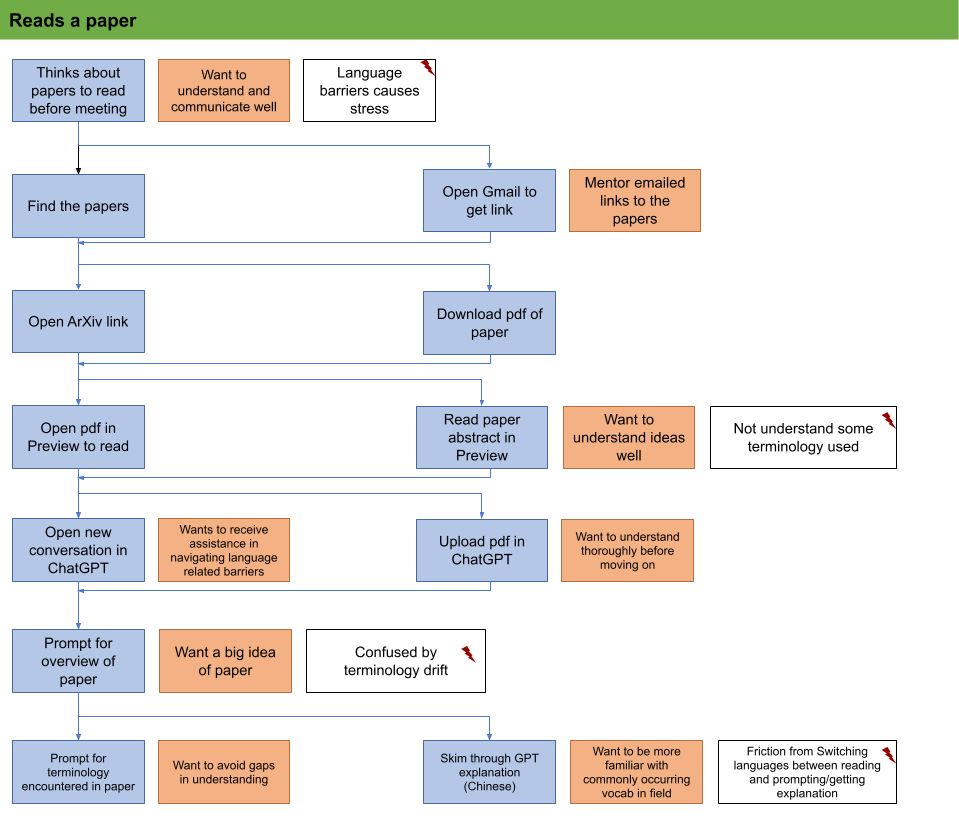

Affinity Diagram & Flow Analysis

Identified recurring breakdowns and user adaptations across participants.

Sequence Diagram & Survey

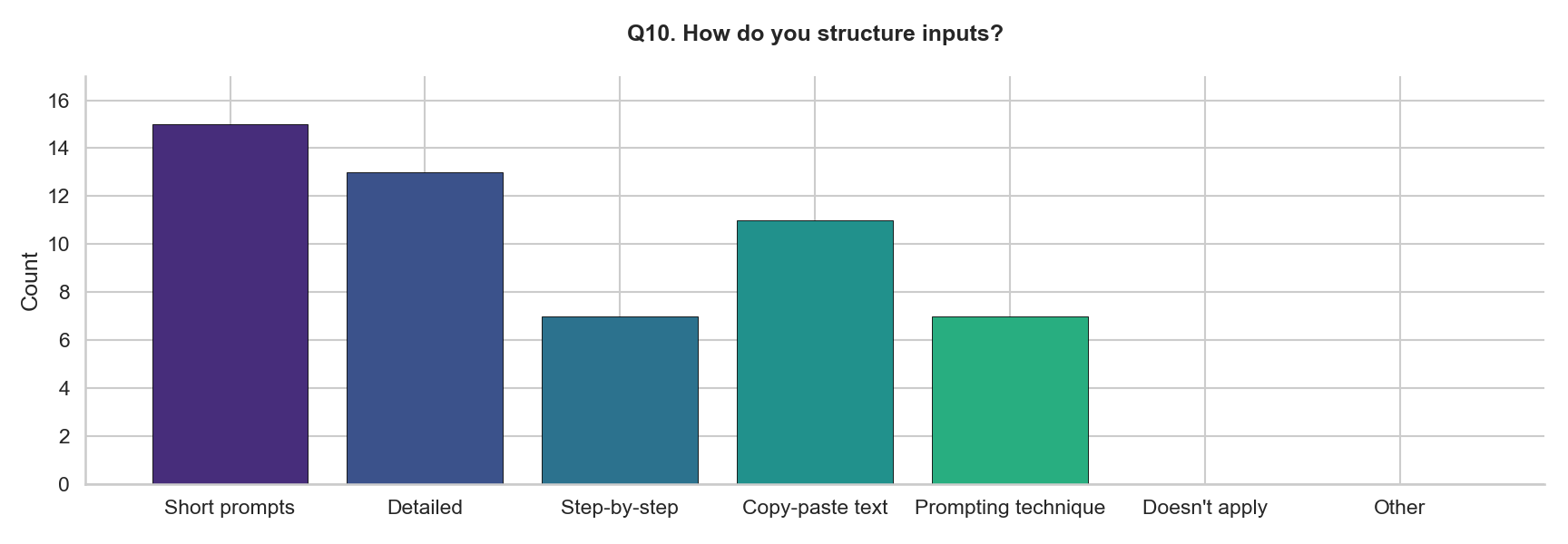

Quantified multilingual AI use patterns, challenges, and coping strategies.

Solution

TEA (Text Entry Augmentation) and the Rosetta prototype provide an interaction layer that sits over standard AI chat interfaces. Rather than replacing the AI model, Rosetta adds contextual support at two critical moments: when crafting prompts and when refining responses. This approach respects existing workflows while reducing language-related friction.

Before the prompt

Voice input with automatic language detection

Real-time translation preview

Editable prompt with language indicators

Context-aware vocabulary suggestions

After the response

Highlight any text to open the tooltip menu

Simplify: reduce the complexity of AI output

Translate: convert to the preferred language

Tone Match: adjust formality and style

Key Findings

What we observed

During usability testing, participants gravitated toward fine-grained controls rather than trying to craft perfect prompts upfront. They frequently highlighted portions of AI responses to adjust tone or clarify meaning, treating the output as a draft to refine rather than a final product. Language switching happened fluidly when visual feedback made it clear which mode was active.

What this means for design

Interaction design for multilingual AI tools should prioritize post-generation refinement over prompt engineering. Users need lightweight, contextual controls that feel like natural editing rather than technical configuration. Visible state indicators are not just helpful; they are essential for building trust in language-aware systems.

Highlight-based refinement reduces re-prompting

Users preferred editing specific sections over regenerating entire responses, maintaining context while fixing issues.

Language switching needs visible state

Clear indicators of active language mode reduced errors and built confidence in the system's understanding.

Users balance trust with verification

Participants wanted AI assistance but needed transparent ways to verify and adjust tone, style, and meaning.

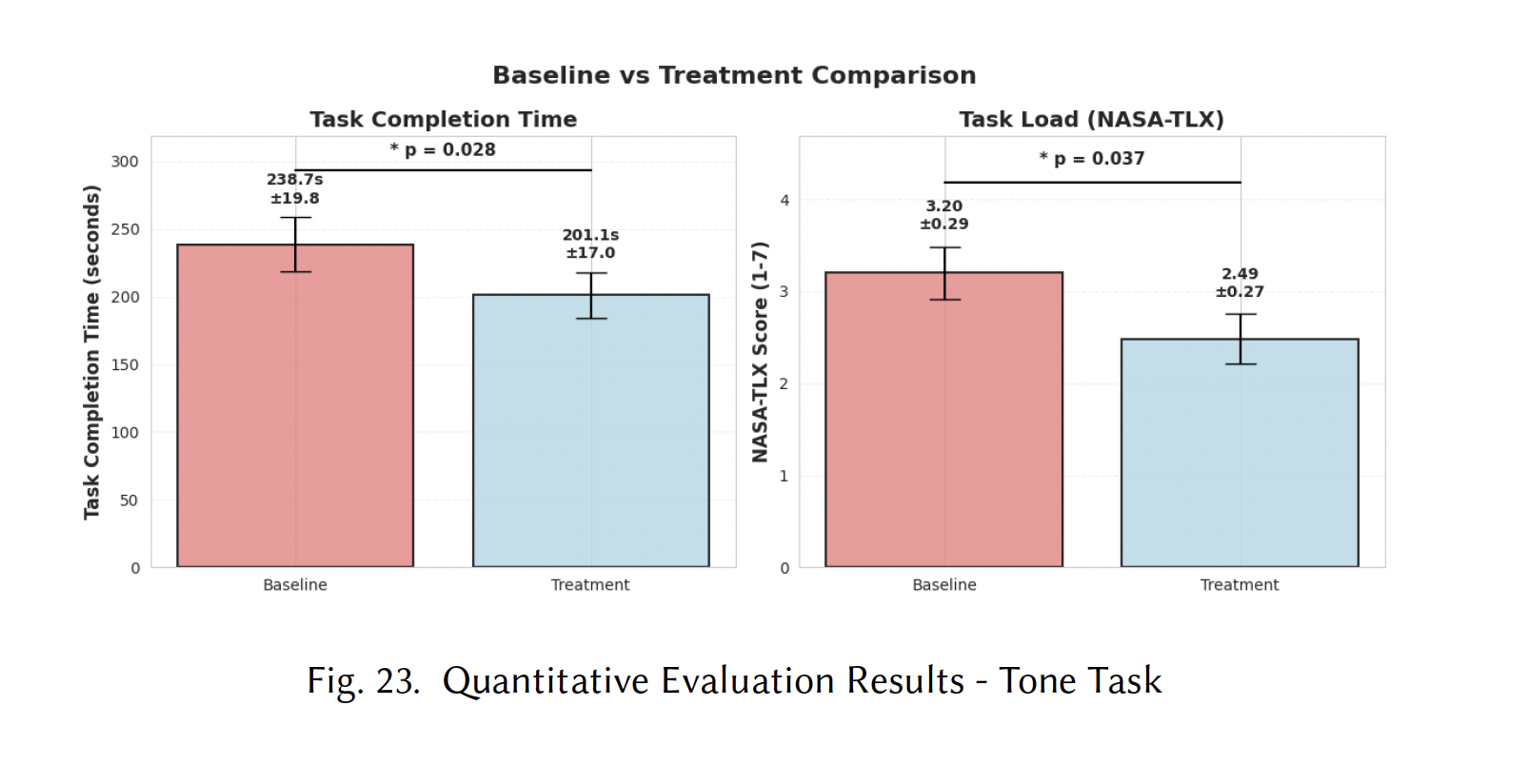

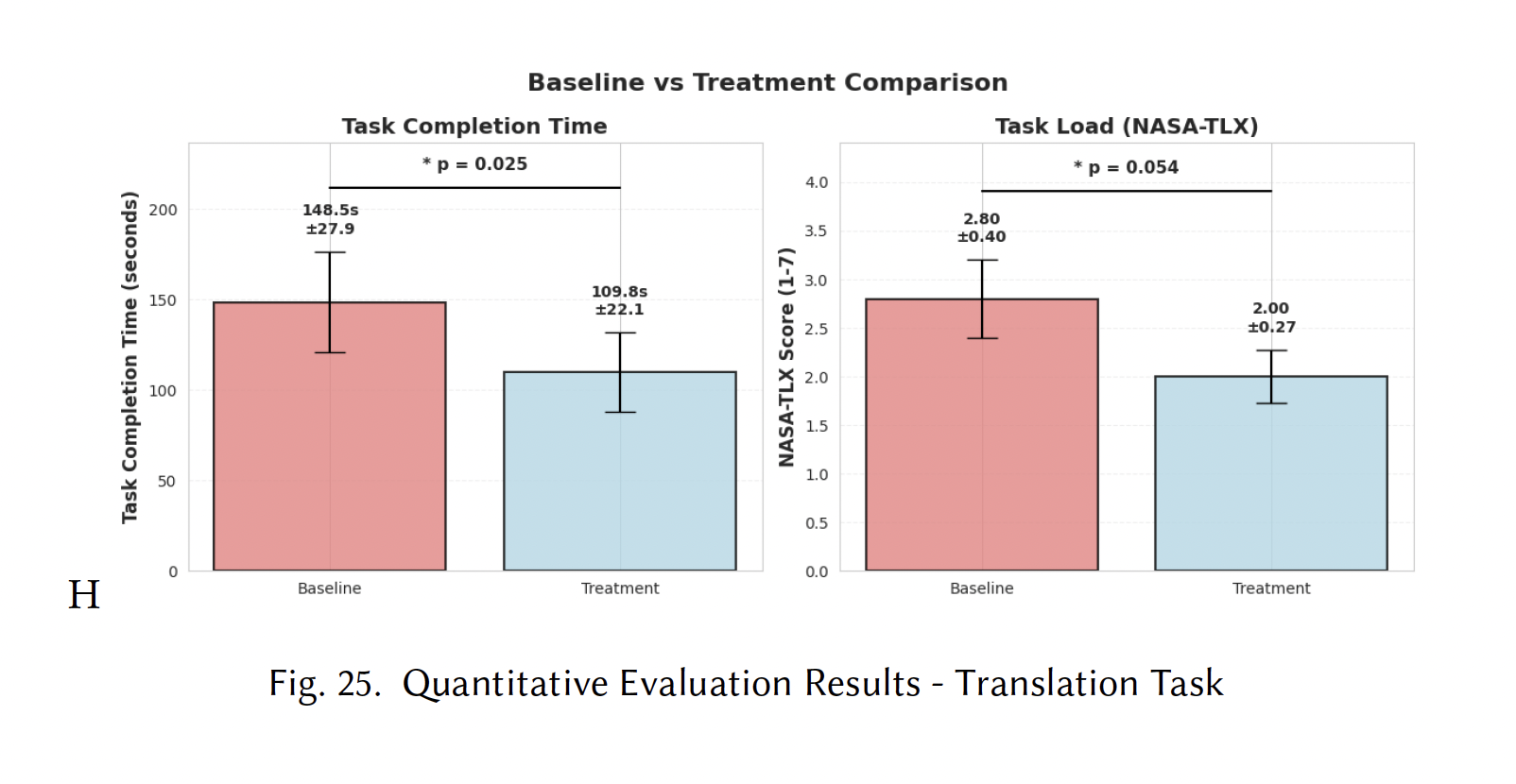

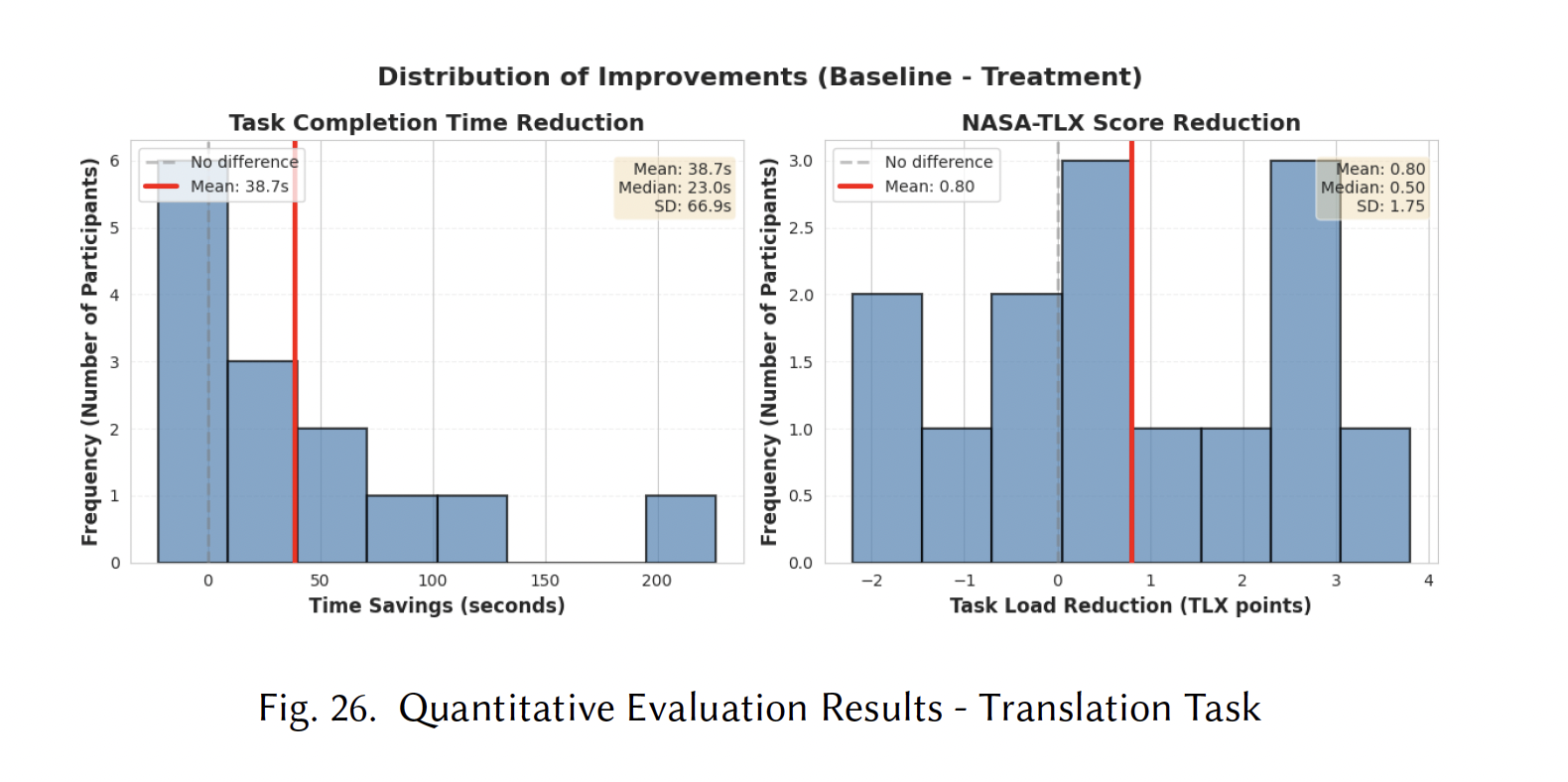

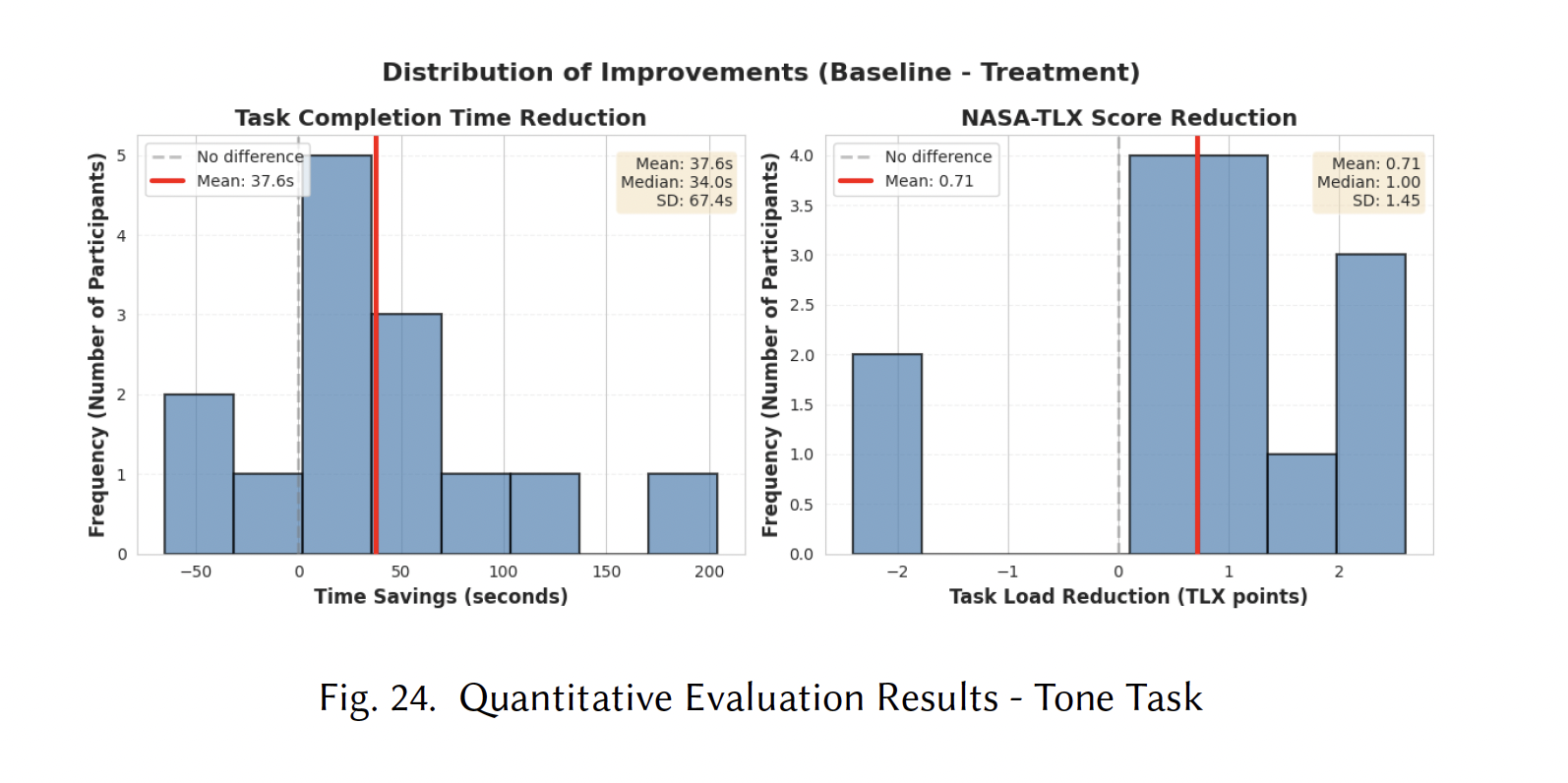

Quantitative results

Task completion

NASA-TLX workload scores

The controlled evaluation (n=8) showed that participants using Rosetta completed multilingual research tasks 32% faster than with a standard AI interface. NASA-TLX scores decreased by an average of 24%, indicating reduced cognitive workload. Most notably, participants reported feeling less anxious about making language mistakes and more confident in their ability to verify AI-generated content.

Takeaways

Interaction can support equity

Thoughtful interaction design can help address systemic inequities in AI tools without requiring model retraining.

Mixed methods strengthen the story

Combining qualitative insights with quantitative metrics validated both user experience and measurable impact.

Local controls matter more than long prompts

Users prefer lightweight, post-generation refinement over crafting complex upfront instructions.

Terminology and feedback shape trust

Clear language about what the system does and visible state indicators are essential for user confidence.

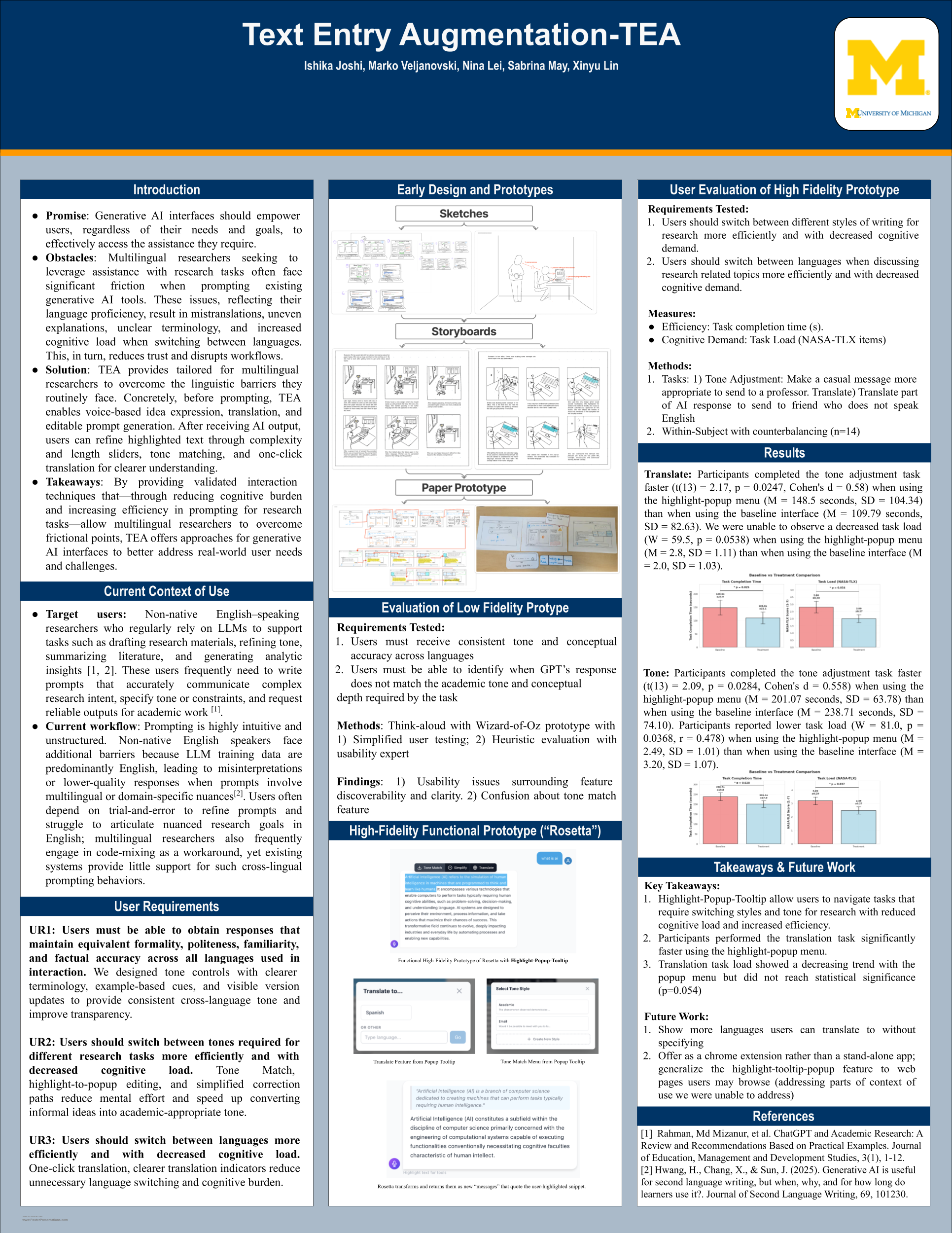

TEA/Rosetta Poster

The figure on the right shows the poster we presented at the University of Michigan's EECS research poster presentation. In addition to the poster, we provided a high-fidelity prototype.

What I would do next

🪨 Test with additional language pairs, especially those with different writing systems

🪨 Explore voice input more deeply as an alternative to keyboard-based interaction

🪨 Study long-term adoption to see how usage patterns evolve with familiarity

🪨 Partner with academic institutions to pilot the system in real research contexts

What I learned as a researcher

🪨 Early observation beats late iteration: contextual inquiry should come before high-fidelity design

🪨 Quantitative validation strengthens qualitative insights and helps communicate impact

🪨 Designing for equity requires designing with, not for, marginalized users

🪨 Small, focused studies can drive meaningful insight when participants are carefully selected

💡Reflection

This project taught me that equity in AI isn't just about model fairness; it's about the entire interaction ecosystem. I learned to recognize my own assumptions as a native English speaker and to design from lived experiences that weren't my own. The moments when participants lit up because the system "understood" their language-switching patterns reinforced why user research matters. I also gained confidence in mixed-methods approaches: the qualitative insights gave us direction, while the quantitative validation gave us credibility. Looking back, I would have started contextual inquiry earlier in the process, as those observations surfaced nuances that surveys couldn't capture. This work has shaped how I think about inclusive design: not as an accommodation, but as fundamental to good design.